
satnogs-wut

The goal of satnogs-wut is to have a script that will take an observation ID and return an answer whether the observation is "good", "bad", or "failed".

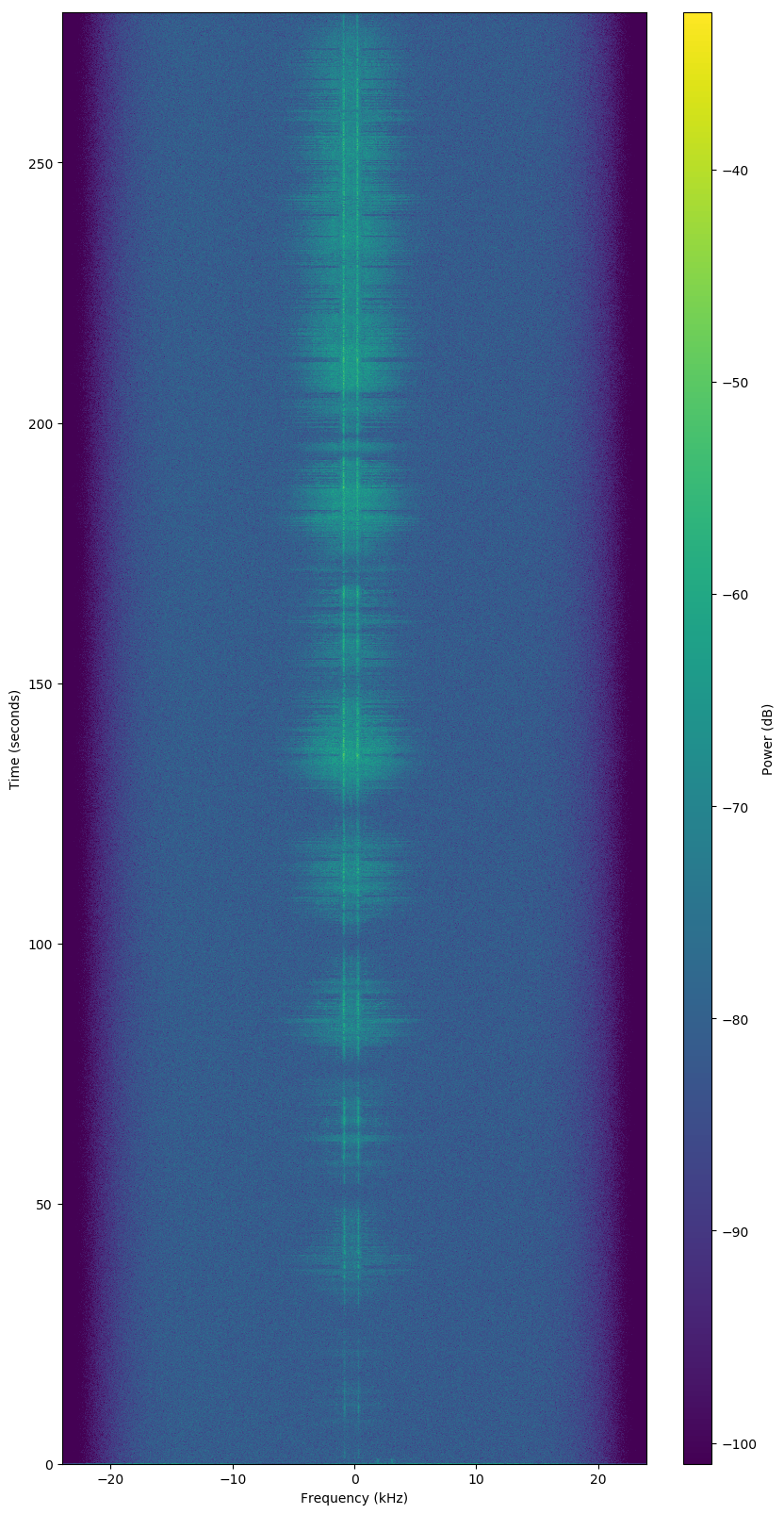

Good Observation

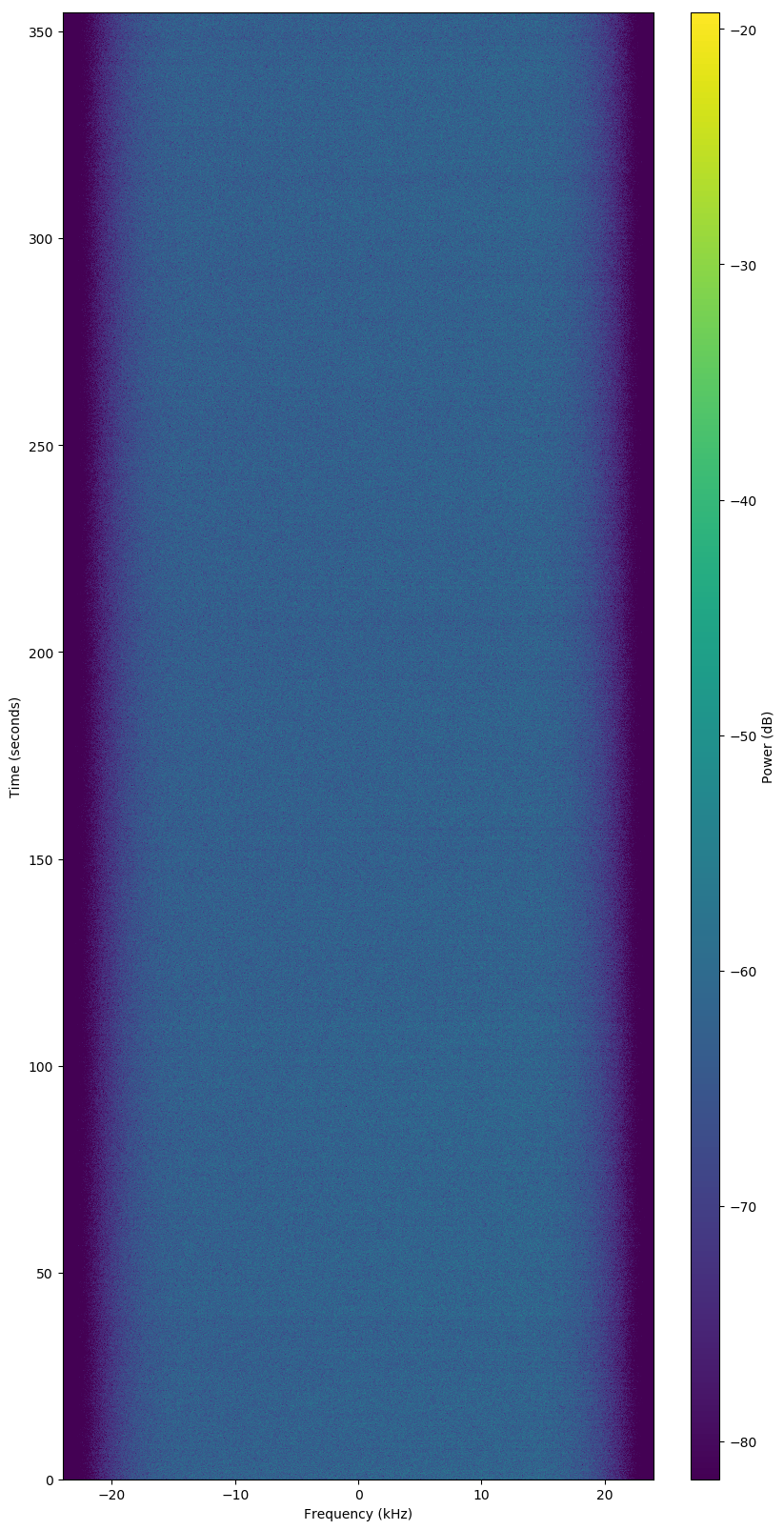

Bad Observation

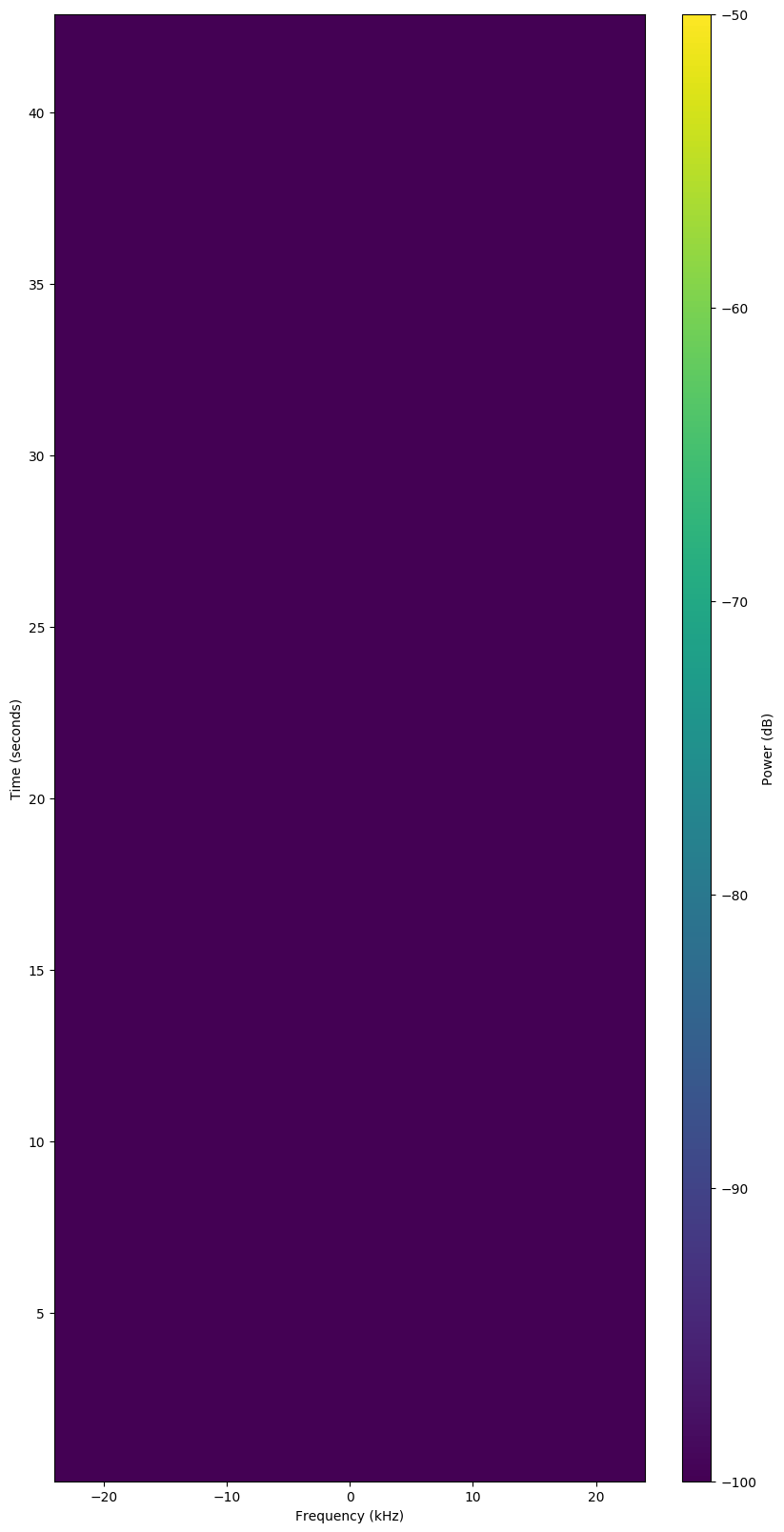

Failed Observation

Machine Learning

The system at present is built upon the following:

- Debian

- Tensorflow

- Keras

Status: still learning/testing; results are inaccurate.

wut?

The following scripts are in the repo:

- wut --- Feed it an observation ID and it returns if it is a "good", "bad", or "failed" observation.

- wut-api-test --- API Tests.

- wut-get-obs --- Download the JSON for an observation ID.

- wut-get-staging --- Download waterfalls to data/staging for review (deprecated).

- wut-get-train-bad --- Download waterfalls to data/train/bad for review (deprecated).

- wut-get-train-good --- Download waterfalls to data/train/good for review (deprecated).

- wut-get-validation-bad --- Download waterfalls to data/validation/bad for review (deprecated).

- wut-get-validation-good --- Download waterfalls to data/validation/good for review (deprecated).

- wut-get-waterfall --- Download waterfall for an observation ID to download/[ID].

- wut-get-waterfall-range --- Download waterfalls for a range of observation IDs to download/[ID].

- wut-ml --- Main machine learning Python script using Tensorflow and Keras.

- wut-review-staging --- Review all images in data/staging.
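As a rough illustration of what a script like wut-get-obs does, the sketch below fetches the JSON for one observation ID and saves it under download/[ID]/. It is not the repo's shell script. The API URL pattern, the function names, and the output file name [ID].json are all assumptions made for this example.

```python
import json
import urllib.request
from pathlib import Path

# Assumption: SatNOGS Network API URL pattern for a single observation.
API_URL = "https://network.satnogs.org/api/observations/{obs_id}/"


def observation_url(obs_id):
    """Build the (assumed) API URL for one observation ID."""
    return API_URL.format(obs_id=int(obs_id))


def save_observation(obs_id, data, root="download"):
    """Write observation JSON to <root>/<ID>/<ID>.json (hypothetical file name)."""
    out_dir = Path(root) / str(obs_id)
    out_dir.mkdir(parents=True, exist_ok=True)
    out_file = out_dir / f"{obs_id}.json"
    out_file.write_text(json.dumps(data, indent=2))
    return out_file


def get_obs(obs_id, root="download"):
    """Download the JSON for an observation ID and store it on disk."""
    with urllib.request.urlopen(observation_url(obs_id), timeout=30) as resp:
        data = json.load(resp)
    return save_observation(obs_id, data, root)
```

Calling get_obs with an observation ID needs network access; save_observation can be tried offline with any dictionary.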

Installation

Most of the scripts are simple shell scripts with few dependencies.

Setup

The scripts use directories that are ignored by git, so you need to create them:

mkdir -p download

mkdir -p data/train/good

mkdir -p data/train/bad

mkdir -p data/train/failed

mkdir -p data/validation/good

mkdir -p data/validation/bad

mkdir -p data/validation/failed

mkdir -p data/staging

mkdir -p data/test/unvetted
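The same layout can also be created from Python, which may be handy if the setup is ever folded into wut-ml. This is only a sketch: the make_dirs helper and the DIRS list are names invented for this example, not part of the repo.

```python
from pathlib import Path

# Directory layout from the Setup section.
DIRS = [
    "download",
    "data/train/good",
    "data/train/bad",
    "data/train/failed",
    "data/validation/good",
    "data/validation/bad",
    "data/validation/failed",
    "data/staging",
    "data/test/unvetted",
]


def make_dirs(root="."):
    """Create every working directory under root; existing ones are left alone."""
    created = []
    for name in DIRS:
        path = Path(root) / name
        path.mkdir(parents=True, exist_ok=True)  # same effect as mkdir -p
        created.append(path)
    return created
```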

Debian Packages

You'll need curl and jq, both in Debian's repos.

apt update

apt install curl jq

Machine Learning

For the machine learning scripts, like wut-ml, both Tensorflow and Keras need to be installed. The versions of those in Debian didn't work for me. IIRC, for Tensorflow I built a pip package of version 2.0.0 from git and installed that. I installed Keras from pip. Something like:

# XXX These aren't the exact commands, need to check...

# Install bazel or whatever their build system is

# Install Tensorflow

git clone tensorflow...

cd tensorflow

./configure

# run some bazel command

dpkg -i /tmp/pkg_foo/*.deb

apt update

apt -f install

# Install Keras

pip3 install --user keras

# A million other commands....

Usage

The main purpose of the script is to evaluate an observation, but to do that, it needs to build a corpus of observations to learn from. So many of the scripts in this repo are just for downloading and managing observations.

The following steps need to be performed:

- Download waterfalls and JSON descriptions with wut-get-waterfall-range. These get put in the download/[ID]/ directories.

- Organize downloaded waterfalls into categories (e.g. "good", "bad", "failed"). Note: a script for this still needs to be written. Put them into their respective directories under:

  - data/train/good/

  - data/train/bad/

  - data/train/failed/

  - data/validation/good/

  - data/validation/bad/

  - data/validation/failed/

- Use the machine learning script wut-ml to build a model based on the files in the data/train and data/validation directories.

- Rate an observation using the wut script.
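The organizing step above has no script yet. One possible shape for it, as a minimal sketch rather than the author's method: read each observation's JSON, take its vetted status, and copy the waterfall image into the matching train or validation directory. Everything specific here is an assumption: the vetted_status field name, a JSON file holding a single object, the file names [ID].json and waterfall_[ID].png inside download/[ID]/, and the rule that every fifth usable observation goes to validation.

```python
import json
import shutil
from pathlib import Path

CATEGORIES = ("good", "bad", "failed")


def organize(download_root="download", data_root="data", validation_every=5):
    """Copy vetted waterfalls into data/{train,validation}/{good,bad,failed}.

    Every validation_every-th usable observation goes to validation,
    the rest go to train. Returns the list of destination paths.
    """
    copied = []
    obs_dirs = sorted(p for p in Path(download_root).iterdir() if p.is_dir())
    for obs_dir in obs_dirs:
        obs_id = obs_dir.name
        json_file = obs_dir / f"{obs_id}.json"           # hypothetical file name
        waterfall = obs_dir / f"waterfall_{obs_id}.png"  # hypothetical file name
        if not (json_file.exists() and waterfall.exists()):
            continue
        # Assumed field name; the JSON is assumed to be a single object.
        status = json.loads(json_file.read_text()).get("vetted_status")
        if status not in CATEGORIES:
            continue  # skip unvetted or unknown observations
        is_validation = (len(copied) + 1) % validation_every == 0
        split = "validation" if is_validation else "train"
        dest_dir = Path(data_root) / split / status
        dest_dir.mkdir(parents=True, exist_ok=True)
        copied.append(Path(shutil.copy2(waterfall, dest_dir)))
    return copied
```

Copying rather than moving keeps download/ intact, so the sorting can be rerun with a different split if the first attempt turns out unbalanced.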

Caveats

This is the first machine learning script I've written. I know little about satellites and less about radio, and I'm not a programmer.

Source License / Copying

Main repository is available here:

License: CC BY-SA 4.0 International and/or GPLv3+ at your discretion. Other code is licensed under its own respective license.

Copyright (C) 2019, 2020, Jeff Moe